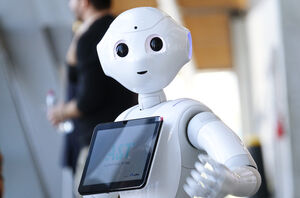


Campaigners criticise Online Safety Act, citing loopholes on harmful content

The Online Safety Act (OSA) has been criticised for failing to adequately protect young people from harmful content, with campaigners vowing to push for stronger regulations.

Ofcom has defended the new measures, calling them a "significant step" and promising further guidance in 2025.

Ofcom's Illegal Harms Codes of Practice, which take effect in March 2025, require platforms to assess and remove illegal material, including terrorist content, child sexual abuse material, and content promoting suicide.

However, critics argue the Act leaves "loopholes" that allow harmful content to remain online. Adele Walton, an online safety advocate, said: "These rules shield platforms from real accountability. This isn’t the Online Safety Act parents or young people want."

The Molly Rose Foundation expressed disappointment, stating: "We are astonished there is not one single targeted measure for platforms to tackle suicide and self-harm material that meets the criminal threshold."

Dame Melanie Dawes, Ofcom's Chief Executive, defended the measures, stating: "For too long, sites and apps have been unregulated, unaccountable, and unwilling to prioritise people’s safety over profits. That changes from today."

Technology Secretary Peter Kyle called the codes a "significant step," adding: "If platforms fail to step up, the regulator has my backing to use its full powers, including fines and blocking access to sites."

Imran Ahmed of the Centre for Countering Digital Hate welcomed the changes but emphasised that more needs to be done: "This is step one. We need Online Safety Act 2.0 to address algorithms, AI, and advertising."

Campaigners say they will keep pressing for stricter protections and for the gaps in the Act to be closed so that vulnerable users are better safeguarded.
