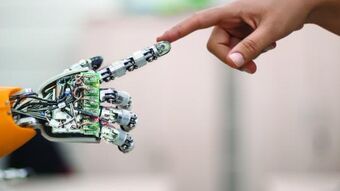

Are we “summoning the demon”?

Our lives have been enormously enriched by the many benefits of the Artificial Intelligence that powers the Internet: online banking, social media, Skype, Uber, Google, and the vast treasury of information at our fingertips (and basically for free). And never getting lost! My personal favourite: Google Maps making it easy to use the bus and metro systems in strange cities. But it’s not all good.

Tesla boss and all-round tech guru Elon Musk put the problem in memorable terms back in 2014, when he suggested that our greatest “existential threat” was Artificial Intelligence, as it gets smarter and smarter year by year. It’s like trying to control the devil through magic spells, he said. It might not work out well when you “summon the demon.”

And he’s not alone. The following year, scores of experts joined him signing on to an open letter that warned of the potential for AI to go wrong. They included Microsoft founder Bill Gates and the two best-known British scientists of our day: the late Stephen Hawking, Cambridge cosmologist; and Astronomer Royal (and former Master of Trinity College, Cambridge) Lord Rees. No-one could accuse these people of being anti-science, anti-tech, or harbouring Luddite sympathies.

The Musk-Gates-Hawking-Rees letter has helped boost a series of initiatives aimed at ensuring the safe and ethical development of AI, such as Cambridge University’s Centre for the Study of Existential Risk, the Leverhulme Centre for the Future of Intelligence, and the Berkeley Center for Human-Compatible AI. It’s worth checking in on what each of these initiatives is up to.

Someone else who has expressed similar concerns is Kevin Kelly, former editor of Wired magazine and one of the top tech commentators of the past generation, who is also an evangelical Christian. Kelly has called for us to engineer our values into AIs.

Way back in 2008, Congressman Brad Sherman asked the U.S. House of Representatives Science and Technology Committee to fund “non-ambitious AI.” He then convened a hearing of the Subcommittee on Terrorism, Nonproliferation, and Trade to explore the need for a “non-proliferation” approach to such new technologies (especially those that would “enhance” humans) – that is, to see them, as we see nuclear technology, as something we need to keep under careful control. He invited me to testify at the hearing. I quoted from the infamous essay by Bill Joy, co-founder of Sun Microsystems, one of the top tech companies of the late 20th century. The essay was the cover story in Wired for April 2000 under the title Why the Future Doesn’t Need Us. It’s still well worth a read!

Joy’s argument is basically this: either tech will go wrong and destroy us all, or we’ll use it to “enhance” and re-engineer humans into becoming machines. Either way, tomorrow will not be for the human race but, at best, a race of machines.

I also made the point that it is vital to get these discussions out into public – they are not the special concern of “ethicists” but need to be the general concern of everyone. And our political leaders.

This is slowly happening with AI. But the “existential threat” it poses is hardly the main topic of conversation at Westminster (I’ll be surprised if it comes up in Tory leadership debates). Or in Washington (no Trump tweets on AI yet).

But there are some interesting signs. For the past three or four years, the International Telecommunication Union – a part of the United Nations – has hooked up with the X-Prize Foundation to host a Global Summit on “AI for Good.” I wasn’t able to attend, but the programme for the 2019 event – in May – shows something of the promise and the problem of efforts like this. There was much useful discussion of the value of AI for development, and issues like equity and inclusivity.

But the government panel consisted of three representatives: from Tunisia, Zimbabwe, and the Bahamas.

I’ll believe the international community is getting serious when that panel brings together top people from the governments of the U.S., China, and the EU.

This isn’t just my view. It’s interesting to note that inventor and tech booster Ray Kurzweil, the man who has popularized the idea of the “singularity” and who gave the closing keynote address, didn’t actually bother to go to the conference at all. He gave his speech from his home in California.

Looking ahead - what’s the agenda?

There are several dimensions to the problem raised by Artificial Intelligence.

For one thing, it is handing enormous power to a small number of huge companies who scoop up data about us and use it for business purposes.

It’s also giving more and more power to governments. While every government is making use of digital information about our lives, the Chinese “social credit” system shows where authoritarian regimes can go in controlling the lives of their citizens.

Its implications for weapons systems have attracted widespread concern. Should machines make the decision to kill – with no human supervision?

But back to the core concern: many people expect a sudden take-off moment when computers become superhumanly intelligent, creating, as my former UnHerd colleague Peter Franklin has put it, a “digital supreme being.” This may happen soon; it may not happen for many years. It may never happen. But the fact that we can’t rule it out means we need to take it very seriously.

Find out more

I discussed some of these issues in an article for the website UnHerd, where you will also find some additional references. And see my CARE book The Robots Are Coming: Us, Them, and God.
